According to a KPMG study, more than 60% of people are cautious about AI systems, which signals significant skepticism. Meanwhile, Salesforce research shows that 54% of the surveyed global knowledge workers don’t trust the data used to train AI, and 68% of them are reluctant to adopt AI at work. With the rise of sophisticated AI agents, the issues of trust and AI transparency are becoming especially pressing.

What are AI agents? These are systems that can analyze different factors, make decisions, and take actions to achieve specific goals. All of this can happen without direct human intervention. Nevertheless, the fairness, ethics, and security of the decisions made by such systems are often questioned.

In this blog post, we will explore the key ethical challenges of modern AI systems and how to address them to ensure the security and credibility of AI agents.

AI Governance: Core Ethical Challenges of AI Agents

Ethical challenges can significantly complicate the adoption of AI agents in security-sensitive domains, including finance, insurance, healthcare, and many others. That’s why, if you’re planning to implement such a solution, it’s essential to consider the potential pitfalls.

Transparency and Explainability Concerns

One of the main concerns is the fact that many AI systems act as so-called black boxes. They make decisions without revealing any clear reasoning to users and developers.

The more complex an agentic AI model is, the harder it is to understand how and why it makes decisions. This is especially true of deep neural networks, which often involve millions of parameters.

Can simpler models address such challenges? Not on their own. While simpler models are easier to explain, they often cannot handle the complexity or achieve the advanced capabilities required for sophisticated agentic AI tasks.

Why Transparency and Explainability Matter

While some might argue users don't need to understand the 'magic behind the scenes' if AI agents deliver results, this overlooks the tangible real-world consequences that arise from opaque decision-making. Transparency and explainability are crucial because they directly impact:

- Accountability. If nobody knows what or who stands behind a decision, it is practically impossible to hold anyone accountable when that decision causes damage.

- Trust. Without understanding, user confidence erodes over time, potentially undermining the entire value proposition of the AI agent.

- Compliance. Regulations such as the GDPR give individuals the right to meaningful information about automated decisions that significantly affect them.

Bias and Discrimination

AI systems often learn from historical data. Such datasets may reflect existing societal biases that AI models can amplify. This can lead to discriminatory outcomes against certain groups based on many factors like race, gender, age, or socioeconomic status.

For example, AI-powered hiring tools may downgrade résumés with female names when evaluating candidates. For the same reason, loan approval systems may disproportionately reject applications from members of minority groups.

What factors can cause bias?

- Unrepresentative datasets used for training. Training data does not always accurately reflect the diversity of the population.

- Human subjectivity in labeling. Human annotators may unintentionally introduce their own biases during the process of data labeling.

- Lack of diverse perspectives at the development stage. Homogeneous development teams may overlook fairness issues, especially in cases related to underrepresented communities.

- Poorly defined objectives. An exclusive initial focus on maximizing accuracy metrics can result in ethical considerations being undervalued or insufficiently addressed during development.

How to Solve AI Transparency Issues

The introduction of transparent and explainable AI agents is one of the key priorities for development teams. Here are the most promising approaches and trends that are gaining traction today.

Explainable AI (XAI) Techniques

Explainable AI (XAI) can be defined as a set of methods and technologies aimed at making AI decisions more understandable to humans. This enhances user trust and helps developers identify flaws or unexpected model behavior more easily. In addition, explainability supports AI compliance and ethical accountability.

Key Methods:

- Post-hoc explanations clarify the behavior of an already trained model.

- Visualization techniques demonstrate which input features influence outputs.

- Natural language explanations provide human-readable justifications for predictions.

Model-Agnostic Tools

These tools ensure explainability without accessing the internal “kitchen” of the AI agent. As a result, they can be used even with black-box models. Apart from increasing transparency, they also help developers debug their solutions as they show features that impact different decisions.

LIME (Local Interpretable Model-Agnostic Explanations)

LIME can explain how an AI model makes a specific decision. It slightly changes the input data and observes how the output changes. Then, it builds a simple model that approximates the complex model’s behavior around that particular prediction.

This method is useful for gaining quick, local insights into complex models. Nevertheless, it focuses not on the entire model but on individual predictions.
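The perturb-and-fit idea can be sketched in a few lines of plain Python. This is not the `lime` library itself, only a simplified illustration of the same principle. The `black_box` model, the Gaussian perturbations, the kernel width, and all function names are assumptions made up for this example.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: a nonlinear score over two features."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 2.0 * x[1] ** 2)))


def solve(a, b):
    """Solve the linear system a * w = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - tail) / m[r][r]
    return w


def explain_locally(predict, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around one prediction."""
    rng = random.Random(seed)
    d = len(instance)
    # Accumulators for the weighted least-squares normal equations.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        # 1. Slightly change the input.
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [v + dv for v, dv in zip(instance, delta)]
        # 2. Observe how the output changes.
        y = predict(sample)
        # 3. Weight the sample by its closeness to the original instance.
        dist2 = sum(dv * dv for dv in delta)
        weight = math.exp(-dist2 / kernel_width ** 2)
        row = delta + [1.0]  # offsets from the instance plus an intercept term
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coefs = solve(xtwx, xtwy)
    return coefs[:d], coefs[d]


weights, intercept = explain_locally(black_box, [0.0, 1.0])
print("local feature weights:", weights)
```

Around the chosen instance, the first weight comes out positive and the second negative: near this point, raising the first feature pushes the score up and raising the second pushes it down. A different instance may produce a different explanation, which is exactly the local nature of the method.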

SHAP (Shapley Additive Explanations)

This approach is based on cooperative game theory (Shapley values). SHAP calculates the contribution of each feature to a model’s prediction and considers all possible combinations of features.

It can explain both individual predictions and overall model behavior. Though it may be computationally intensive, it offers more consistent and theoretically grounded explanations than LIME.
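To make the "all possible combinations" part concrete, here is a minimal sketch that computes exact Shapley values by brute force. It is not the `shap` library, which relies on much faster approximations. The `score` model, the choice to replace "missing" features with a baseline value, and the function names are assumptions for illustration.

```python
from itertools import combinations
from math import factorial


def score(x):
    """Hypothetical model with one interaction between features 0 and 2."""
    return 2.0 * x[0] + 1.0 * x[1] + x[0] * x[2]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(members | {i}) - value(members))
    return phi


contributions = shapley_values(score, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0])
print(contributions)
```

For this toy model the contributions are approximately 2.5, 1.0, and 0.5. They add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is split evenly between the two features involved. The number of coalitions doubles with every added feature, which is why the method is computationally intensive.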

Clear Standardized Documentation and Communication

Transparency in AI systems can also be powered by clear documentation and effective communication.

A standardized way to document AI models can greatly facilitate work with them. Google’s Model Cards are a good example of such documents. They include intended use, performance metrics, limitations, and potential biases, helping stakeholders understand the scope and risks of a model.
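As a rough illustration, such a document can be kept next to the model as structured data. The sketch below is not Google's official Model Card schema. The `ModelCard` class, its field names, and every value in the example are hypothetical placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical, simplified model card covering the sections named above."""
    name: str
    version: str
    intended_use: str
    performance_metrics: dict = field(default_factory=dict)
    limitations: list = field(default_factory=list)
    potential_biases: list = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# All values below are placeholders, not real measurements.
card = ModelCard(
    name="loan-approval-agent",
    version="0.1.0",
    intended_use="Pre-screening of consumer loan applications with human review.",
    performance_metrics={"accuracy": None, "true_positive_rate_by_group": None},
    limitations=["Not validated for business loans."],
    potential_biases=["Training data may under-represent younger applicants."],
)
print(card.to_json())
```

Storing the card as JSON makes it easy to version together with the model and to render for different audiences.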

One of the peculiarities of AI agents is that not only tech experts but also users without any specialized background deal with them. Therefore, in many areas like healthcare, it is vital to make AI explanations understandable to non-technical users.

Visual explanations, plain-language summaries, and "what-if" scenarios help ensure trust, safety, and informed consent.

How to Mitigate Bias in AI Agents

There are several powerful strategies that can help you reduce bias in AI.

Increasing Diversity of Datasets

First of all, it is essential to enhance the quality and representation of the training data. Here’s what you can do.

- Decolonize data. You should question and correct the biases embedded in datasets. It is necessary to pay special attention to those biases that are based on dominant or privileged perspectives.

- Focus on synthetic data. If you do not have enough real information to support diversity within your datasets, you can create synthetic data. It includes artificial examples that can balance underrepresented groups in the dataset and improve the model’s fairness.
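One simple way to create synthetic examples is to interpolate between real samples of an underrepresented group. The sketch below is a simplified take on SMOTE-style oversampling: real SMOTE interpolates between nearest neighbors, while this version pairs samples at random. The function name and the sample values are assumptions for illustration.

```python
import random


def oversample_minority(samples, target_size, seed=0):
    """Add synthetic points by interpolating between random pairs of real samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    while len(samples) + len(synthetic) < target_size:
        a, b = rng.sample(samples, 2)
        t = rng.random()  # position of the new point on the segment from a to b
        synthetic.append([ai + t * (bi - ai) for ai, bi in zip(a, b)])
    return samples + synthetic


# Hypothetical numeric features for an underrepresented group.
minority = [[1.0, 10.0], [2.0, 12.0], [3.0, 11.0]]
balanced = oversample_minority(minority, target_size=8)
print(len(balanced))
```

Interpolated points stay inside the range of the real data, so they cannot add diversity that the original samples lack. Synthetic data complements the collection of more representative real data rather than replacing it.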

Algorithmic Fairness Metrics

Researchers use specific metrics to analyze and compare fairness across models. This allows them to make informed adjustments.

- Equal opportunity. This metric checks that individuals who actually qualify for a positive outcome have an equal chance of receiving it, whatever group they belong to (for example, that creditworthy applicants from different groups are approved for a loan at the same rate).

- Demographic parity. This metric requires the share of positive outcomes to be the same across groups. Because it ignores differences in the underlying true outcomes, enforcing it can sometimes reduce accuracy.
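Both metrics can be computed directly from predictions. The sketch below reports each one as the gap between the best and worst group, so 0 means perfectly equal. The function names and the tiny loan dataset are made up for illustration.

```python
def selection_rate(y_pred, groups, group):
    """Share of positive predictions within one group."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)


def true_positive_rate(y_true, y_pred, groups, group):
    """Share of truly positive cases in one group that were predicted positive."""
    preds = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(preds) / len(preds)


def demographic_parity_difference(y_pred, groups):
    rates = [selection_rate(y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true, y_pred, groups):
    rates = [true_positive_rate(y_true, y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


# Hypothetical loan data: 1 = creditworthy (y_true) or approved (y_pred).
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

print(demographic_parity_difference(y_pred, groups))
print(equal_opportunity_difference(y_true, y_pred, groups))
```

In this toy data, group A is approved 80% of the time and group B 20% of the time, a demographic parity gap of about 0.6. Among creditworthy applicants, all of group A but only half of group B are approved, an equal opportunity gap of 0.5.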

Bias Auditing Tools

You can also leverage specialized auditing tools.

- IBM’s AI Fairness 360. This toolkit is designed for detecting and mitigating bias across the machine learning pipeline.

- Microsoft’s Fairlearn. It focuses on fairness-aware model training and offers visualizations that highlight trade-offs between fairness and accuracy.

Common AI Security Threats and How to Address Them

When you are preparing for the launch of your AI agent, you should also stay aware of the possible security threats. Below, you can find the most common risks and the ways to mitigate their potential harm.

Adversarial Attacks

Attackers can introduce very subtle (often even practically unnoticeable) modifications to inputs that can lead to incorrect decisions made by AI models.

Such attacks are based on the fact that many models rely on complex, high-dimensional patterns. As a result, even small pixel changes or noise injection can throw off predictions.

For example, attackers can fool AI-powered surveillance cameras into misidentifying them as someone else by wearing specially designed glasses or a patch on their clothing.
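To show how small the modification can be, here is a sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a tiny logistic regression model. Choosing FGSM is our assumption, since many other attack techniques exist. The weights, the input, and the step size are made up for illustration.

```python
import math

# Hypothetical trained logistic regression model.
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.0


def predict_proba(x):
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y_true, epsilon):
    """Move each feature by at most epsilon in the direction that increases the loss."""
    p = predict_proba(x)
    # Gradient of the log loss with respect to the input features.
    grad = [(p - y_true) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.3, 0.1, 0.2]
x_adv = fgsm(x, y_true=1, epsilon=0.15)

print(predict_proba(x) > 0.5)      # original input is classified as positive
print(predict_proba(x_adv) > 0.5)  # perturbed input is classified as negative
```

No feature moves by more than 0.15, yet the predicted class flips. Against image models, the same idea spreads a small change across thousands of pixels, which is why the modification can be practically unnoticeable.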

Data Poisoning

The security of AI agents can also be compromised by data poisoning. In that case, malicious data is injected into the training set. Even a small number of extra inputs can shift decision boundaries.

These attacks are commonly used against spam filters. By poisoning the training data, attackers can teach the filter to label spam emails as “not spam”. After that, future attacks can pass through undetected.
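The effect of a few poisoned records can be sketched with a deliberately simple spam filter. The nearest-centroid classifier, the single "suspicious word count" feature, and all data points are assumptions for illustration. Real filters are far more complex, but the mechanism is the same.

```python
def train_centroids(data):
    """Compute the mean feature value per label from (feature, label) pairs."""
    sums, counts = {}, {}
    for x, label in data:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(centroids, x):
    """Assign the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


# Feature: number of suspicious words in a message (hypothetical data).
clean = [(0, "ham"), (1, "ham"), (1, "ham"), (2, "ham"),
         (6, "spam"), (7, "spam"), (8, "spam"), (9, "spam")]

# The attacker injects three spam-like messages labeled as legitimate.
poison = [(9, "ham"), (10, "ham"), (10, "ham")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

print(classify(clean_model, 5))     # spam
print(classify(poisoned_model, 5))  # ham
```

Three injected records out of eleven are enough to move the decision boundary, so a message that the clean model blocks now slips through.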

Model Extraction and Inference Attacks

Attackers can reverse-engineer or steal model functionality using public APIs.

This may result in intellectual property theft and exposure of sensitive data. For example, a model might unintentionally expose information about individuals as a result of inference attacks.

AI-Generated Disinformation

LLMs can be exploited to generate false or misleading content, like fake news, deceptive social media posts, or phishing emails.

This can lead to trust erosion, political destabilization, as well as financial and reputational damage to individuals or companies.

Mitigation Strategies

- Ensure differential privacy by adding controlled statistical noise to data. This will prevent the leakage of individual records.

- Conduct penetration testing on a regular basis. Simulated attacks will help identify and patch vulnerabilities in deployed AI systems.

- Train models to be resilient against adversarial inputs, for example by including adversarial examples in the training data.

- Restrict access to models using strong authentication methods and monitor them to detect misuse.

- Follow the legal frameworks and standards that apply in your region, such as the EU AI Act and ISO/IEC 27001.

- Track and validate each model version (including training data and test performance).
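As an illustration of the first strategy in the list, differential privacy, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names, the sample records, and the privacy budget `epsilon` are assumptions for this example. Production systems should rely on a vetted differential privacy library rather than hand-written noise.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records that match the predicate."""
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one record changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 52, 67, 29, 58, 44]  # hypothetical records
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(noisy)
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives more accurate answers. The noise averages out over many different queries, so aggregate patterns remain usable even though no single answer reveals whether a particular record is present.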

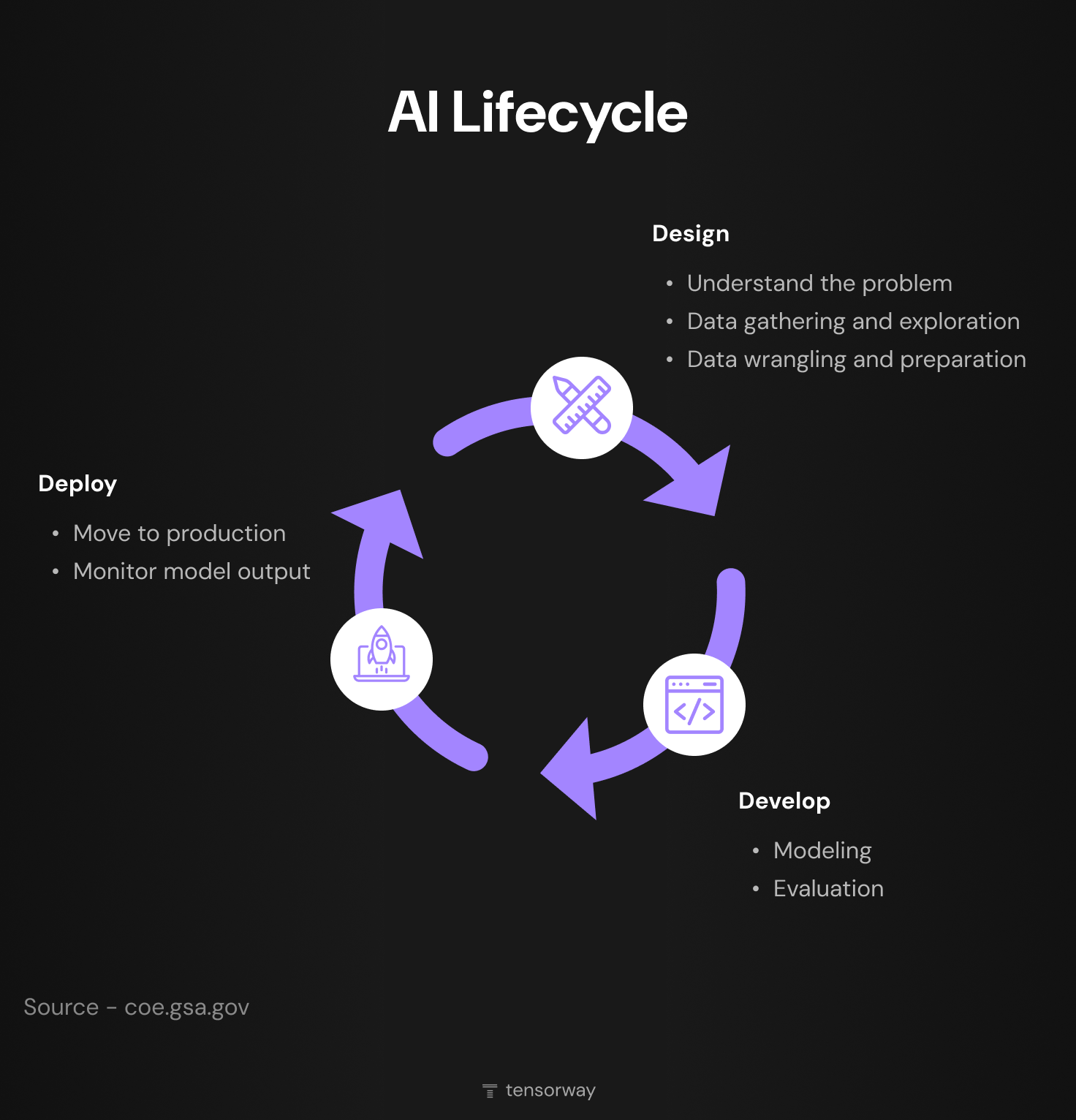

AI Security and Ethics across the Entire AI Lifecycle

Ethics and security are the core principles that should be implemented throughout the AI lifecycle.

A responsible AI strategy starts with design. The approach that includes embedding ethics and security from the first steps is often called ethics-by-design. It ensures that your AI agents, as well as the ideas and goals behind them, are aligned with societal values.

Design Stage

What should be done in the design phase?

Ethical Foresight

To spot potential risks, you can involve diverse stakeholders in your project. They will analyze your idea and try to predict its social impact.

If your system is likely to reinforce inequality or misinformation, it’s best to reconsider the idea and development timeframe.

Incorporation of Privacy-by-Design Principles

Apart from alignment with ethical values, it is also vital to guarantee AI compliance with frameworks like the GDPR.

- Minimize data collection (collect only the data that is really necessary for the task) and avoid collecting personally identifiable information (PII), if possible.

- Add statistical noise (it protects individual records but still preserves patterns).

- Ensure users are aware of what data is collected and how it is used.

- Allow users to withdraw consent and delete their data.

- Encrypt data both at rest and in transit.

- Rely only on secure APIs.

- Introduce role-based access controls.

- Maintain audit trails of data access and model interactions.
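Two of the items above, role-based access controls and audit trails, work well together: every access decision is checked against a role and then recorded. The sketch below shows the idea in memory. The roles, permission names, and log format are hypothetical, and a real system would write to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read_predictions"},
    "ml_engineer": {"read_predictions", "read_training_data"},
    "admin": {"read_predictions", "read_training_data", "delete_user_data"},
}

audit_log = []


def authorize(user, role, action):
    """Check the role's permissions and record the attempt, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(authorize("dana", "analyst", "read_training_data"))  # False
print(authorize("lee", "admin", "delete_user_data"))       # True
print(len(audit_log))                                      # 2
```

Denied attempts are logged as well, since repeated denials are often the first visible sign of misuse.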

Risk Assessment

Even before your model is trained, you should anticipate how it could be misused. By surfacing such cases early, you can define methods to minimize the negative consequences of its application.

Development Stage

During AI agent development, you should continue keeping your focus on ethics and security:

- Assess your models with the help of ethical metrics like demographic parity or equal opportunity, and avoid discriminatory patterns.

- Apply explainable AI methods.

- Utilize privacy-preserving techniques (for instance, federated learning or differential privacy).

- Test your models across diverse user groups and scenarios.

- Define the scenarios in which your model could be compromised or misused.

- Stress-test models with adversarial inputs.

- Perform penetration testing of APIs or interfaces.

Deployment

Before deploying your AI agent, several steps should be taken to ensure its security and transparency:

- Allow humans to be involved in the process of making critical decisions.

- Establish recourse mechanisms (appeals, corrections, etc.).

- Secure APIs and monitor for abuse.

- Implement access control policies.

- Use AI-specific firewalls or rate limiting.

- Maintain continuous logging and audit trails.
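Rate limiting, mentioned in the list above, can be implemented in several ways. The sketch below uses a token bucket, which is our choice for illustration rather than a requirement. The class name, capacity, and refill rate are assumptions, and in practice you would keep one bucket per API key or client.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then a steady refill rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self):
        """Return True and spend one token if the request may proceed."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(capacity=5, refill_per_second=1.0)
results = [bucket.allow() for _ in range(7)]
print(results)
```

Limiting the request rate also makes model extraction through a public API slower and more expensive, because such attacks typically depend on a large number of queries.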

AI Governance at Post-deployment Stage

Deploying your system doesn’t mean that your work on its security is over. Continuous oversight is a must. Here are a few of our practical recommendations:

- Monitor your system performance continuously to detect any emerging security threats.

- Detect patterns and behaviors that can lead to unfair or inaccurate outcomes over time.

- Make sure that your system keeps up with evolving ethical standards, newly introduced laws, and changing user expectations.
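One practical way to spot such changes is to compare the distribution of recent model scores with a baseline recorded at launch. The sketch below uses the population stability index (PSI), which is our choice of drift metric for illustration. The bin edges, the sample scores, and any alert threshold you apply to the result are assumptions to tune for your own system.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples over shared bins."""

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # The bin index is the number of edges at or below the value.
            counts[sum(1 for e in edges if v >= e)] += 1
        # Floor at eps so that empty bins do not cause log(0).
        return [max(c / len(values), eps) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.35]
recent = [0.6, 0.7, 0.8, 0.9, 0.95, 0.85, 0.55, 0.9, 0.8, 0.4]

print(psi(baseline, baseline, edges))
print(psi(baseline, recent, edges))
```

Identical distributions give a PSI of 0, and the value grows as the recent scores drift away from the baseline. Running the same comparison separately for each user group can reveal drift that affects only some groups, which is an early warning of unfair outcomes.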

Wrapping Up

From education to healthcare, AI agents can make decisions that directly impact humans in any industry. Given this, their ethics, transparency, and security become major concerns.

Adopting explainable AI techniques, using diverse datasets, and applying auditing tools can help teams build AI agents aligned with core human values.

By following the main ethics and security best practices, you can deliver solutions that will bring real value to their users and change approaches to solving many everyday tasks.

Already know how you want to use an AI agent in your business? Consult with our experts first to make sure it’s safe and secure for people and your company. Don’t hesitate to contact us now and get a project estimate for free.