In 2025, the global LLM market is estimated at $7.77 billion. If current trends in AI adoption hold, it is expected to reach $123.09 billion by 2034, which implies a CAGR of 35.92% over the forecast period. The growing demand for business process optimization and automation is among the key drivers of this expansion. Industries like healthcare, e-commerce, and finance are investing heavily in AI solutions and accelerating their adoption. As a result, the need for robust LLM frameworks to manage prompts, memory, agents, and data pipelines is on the rise.

In this article, we will explore the most popular options, including LangChain, LlamaIndex, AutoGen, and others. We will analyze their key features, strengths, and real-world applications across various business domains. This will help you better understand the peculiarities of the available frameworks and make the right choice for your AI project.

LLM Frameworks: Key Facts You Need to Know

Before diving into the details of the best LLM frameworks, let’s turn to the basics of this technology.

LLM frameworks are software tools, features, and libraries intended to facilitate the processes of development, orchestration, and deployment of apps powered by large language models. They provide structure and modular components to perform complex tasks that go beyond a single prompt-response interaction.

Different Categories of LLM Frameworks

Based on their functionality, it is possible to define several categories of frameworks. Nevertheless, these groups often overlap in practice. Let’s take a closer look at them.

Prompt Orchestration

Prompt orchestration frameworks provide the tools to structure, sequence, and manage interactions with LLMs across multiple steps.

Such tools let developers go beyond the standard process of issuing a single prompt and receiving a response. Instead, developers can chain prompts together, add conditional logic, and package components so that they are reusable.

This is crucial for creating complex AI apps, such as chatbots, virtual assistants, or dynamic content generators, for different industries.
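
To make the idea of chained prompts with conditional logic more concrete, here is a minimal sketch in plain Python. It does not use any particular framework's API: `fake_llm`, `make_step`, and `run_chain` are hypothetical names, and the "model" only echoes its prompt so that the example runs without an API key.

```python
from typing import Callable

# Hypothetical stand-in for a real model call; it only echoes a tagged string
# so the example runs without any API key or network access.
def fake_llm(prompt: str) -> str:
    return f"<answer to: {prompt}>"

def make_step(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Build a reusable step that fills a prompt template and calls the model."""
    def step(text: str) -> str:
        return llm(template.format(input=text))
    return step

def run_chain(user_input: str, llm: Callable[[str], str] = fake_llm) -> list[str]:
    """Run a small chain with one conditional branch; return every step output."""
    summarize = make_step("Summarize: {input}", llm)
    translate = make_step("Translate to English: {input}", llm)
    outputs = []
    text = user_input
    # Conditional logic: only add the translation step for non-ASCII input.
    if not text.isascii():
        text = translate(text)
        outputs.append(text)
    # The output of the previous step (if any) becomes the input of this one.
    text = summarize(text)
    outputs.append(text)
    return outputs
```

Calling `run_chain("hello")` runs only the summarization step, while input containing non-ASCII characters passes through the translation step first. Real orchestration frameworks add templating, retries, and streaming on top of this basic pattern.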

Memory Interfaces

These frameworks allow LLMs to retain previous inputs and outputs. As a result, interactions become more coherent and personalized over time.

Such components monitor conversation history, user preferences, and task states. These capabilities are essential for virtual assistants, tutoring systems, and customer service bots, where continuity is required.
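
As an illustration, the sketch below shows one simple way such a memory component could work: it keeps a sliding window of recent turns plus user preferences and prepends them to each new prompt. The class `ConversationMemory` and its methods are hypothetical and are not taken from any specific framework.

```python
from collections import deque

class ConversationMemory:
    """Keeps the last `max_turns` exchanges plus simple user preferences."""

    def __init__(self, max_turns: int = 3):
        self.turns = deque(maxlen=max_turns)  # oldest turns drop off automatically
        self.preferences = {}

    def remember(self, user_message: str, reply: str) -> None:
        self.turns.append((user_message, reply))

    def build_prompt(self, new_message: str) -> str:
        """Prepend stored preferences and history to the new user message."""
        lines = [f"Preference: {k}={v}" for k, v in sorted(self.preferences.items())]
        for user_message, reply in self.turns:
            lines.append(f"User: {user_message}")
            lines.append(f"Assistant: {reply}")
        lines.append(f"User: {new_message}")
        return "\n".join(lines)

memory = ConversationMemory(max_turns=2)
memory.preferences["language"] = "English"
memory.remember("Hi", "Hello!")
memory.remember("What is RAG?", "Retrieval-Augmented Generation.")
memory.remember("Thanks", "You're welcome.")
prompt = memory.build_prompt("Can you give an example?")
```

With `max_turns=2`, the first exchange is dropped from the prompt, which keeps the context within a fixed budget. Production memory interfaces often summarize older turns instead of discarding them.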

Agent-Based Systems

LLM agent frameworks introduce reasoning and autonomy by enabling AI systems to act as decision-making agents. Agent frameworks enable LLMs to plan, select tools to execute particular processes, and collaborate with other agents to complete tasks.

Agent-based architectures are particularly useful for complex workflows and task automation. Such use cases are in high demand across industries. For example, on our website, you can read about legal automation.
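
The tool-selection step can be sketched as follows. This is a simplified, assumption-heavy example: in a real agent the language model decides which tool to call, whereas here the hypothetical `choose_tool` function uses a keyword rule so that the code stays deterministic. The tools `calculator` and `word_count` are also invented for illustration.

```python
from typing import Callable

# Hypothetical tools the agent may call.
def calculator(expression: str) -> str:
    """Evaluate 'a op b' where op is +, - or * (tokens separated by spaces)."""
    a, op, b = expression.split()
    x, y = float(a), float(b)
    results = {"+": x + y, "-": x - y, "*": x * y}
    return str(results[op])

def word_count(text: str) -> str:
    return str(len(text.split()))

TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "word_count": word_count,
}

def choose_tool(task: str) -> tuple[str, str]:
    """Stand-in for the LLM's decision step: a real agent would ask the model
    which tool to use; a keyword rule keeps this example deterministic."""
    if task.startswith("compute "):
        return "calculator", task[len("compute "):]
    return "word_count", task

def run_agent(task: str) -> str:
    tool_name, tool_input = choose_tool(task)
    observation = TOOLS[tool_name](tool_input)  # execute the selected tool
    return f"{tool_name} -> {observation}"
```

For example, `run_agent("compute 6 * 7")` routes the task to the calculator, while any other text is sent to the word counter. Agent frameworks wrap this select-execute-observe cycle in a loop and feed each observation back to the model.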

Data Pipelines

Data pipeline frameworks connect LLMs to external sources of structured or unstructured data. This is an important step to enable context-rich responses.

A major use case of such frameworks is Retrieval-Augmented Generation (RAG). In this case, a large language model needs to identify the most relevant information in a knowledge base or document store before generating a response. This greatly enhances factual accuracy and domain-specific relevance.

If you want to learn how RAG works in real-life cases in e-commerce, follow this link and read our article about this technique. Another practical example of using RAG is an AI tutor built by our team.

Top LLM Frameworks

For this article, we have chosen the best LLM frameworks to help you explore their functionality, as well as their strengths and weaknesses.

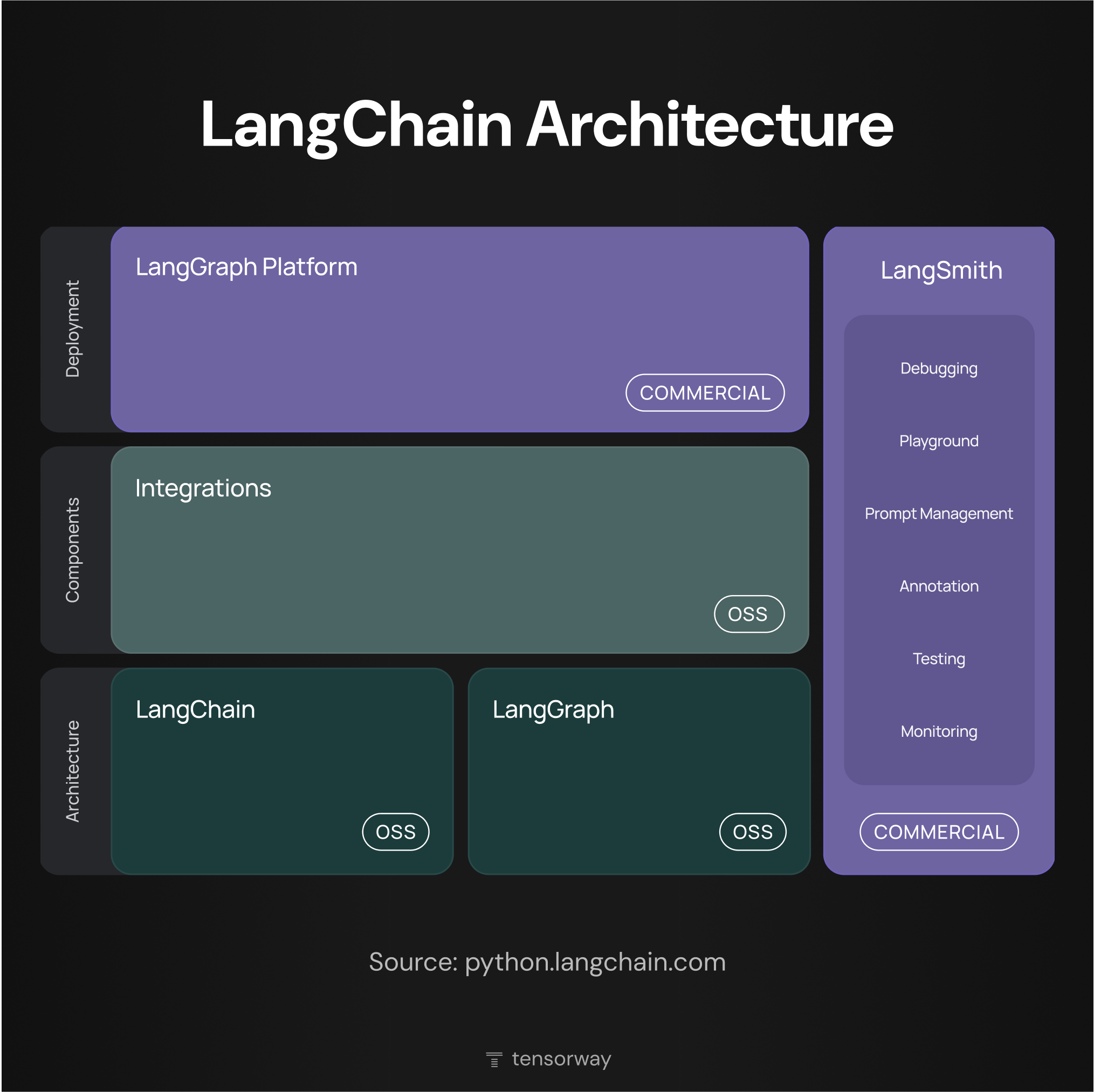

LangChain

LangChain is one of the most widely used frameworks for building LLM-powered apps. It offers modular components that help developers orchestrate prompts, manage memory, integrate tools, and create autonomous agents for various business domains.

Core Components of LangChain

- Chains. These are modular sequences of LLM calls and logic steps, which allow for multi-step workflows.

- Agents. Such autonomous systems can choose the right tools and actions based on the user’s input.

- Memory. These components are interfaces that enable apps to retain and reference previous interactions.

- Tools. LangChain can integrate external functions or APIs. Thanks to this, LLMs can call them during execution.

Pros of using LangChain

- It is easy to compose, test, and reuse components across different apps.

- It has a large ecosystem, which ensures extensive integrations.

- The framework also includes support for observability, tracing, and async workflows.

Cons of using LangChain

- The learning curve is rather steep. A wide range of components can be confusing for beginners.

- LangChain can feel heavyweight compared to simple prompt engineering tools.

- Frequent framework updates can require reworking parts of the codebase.

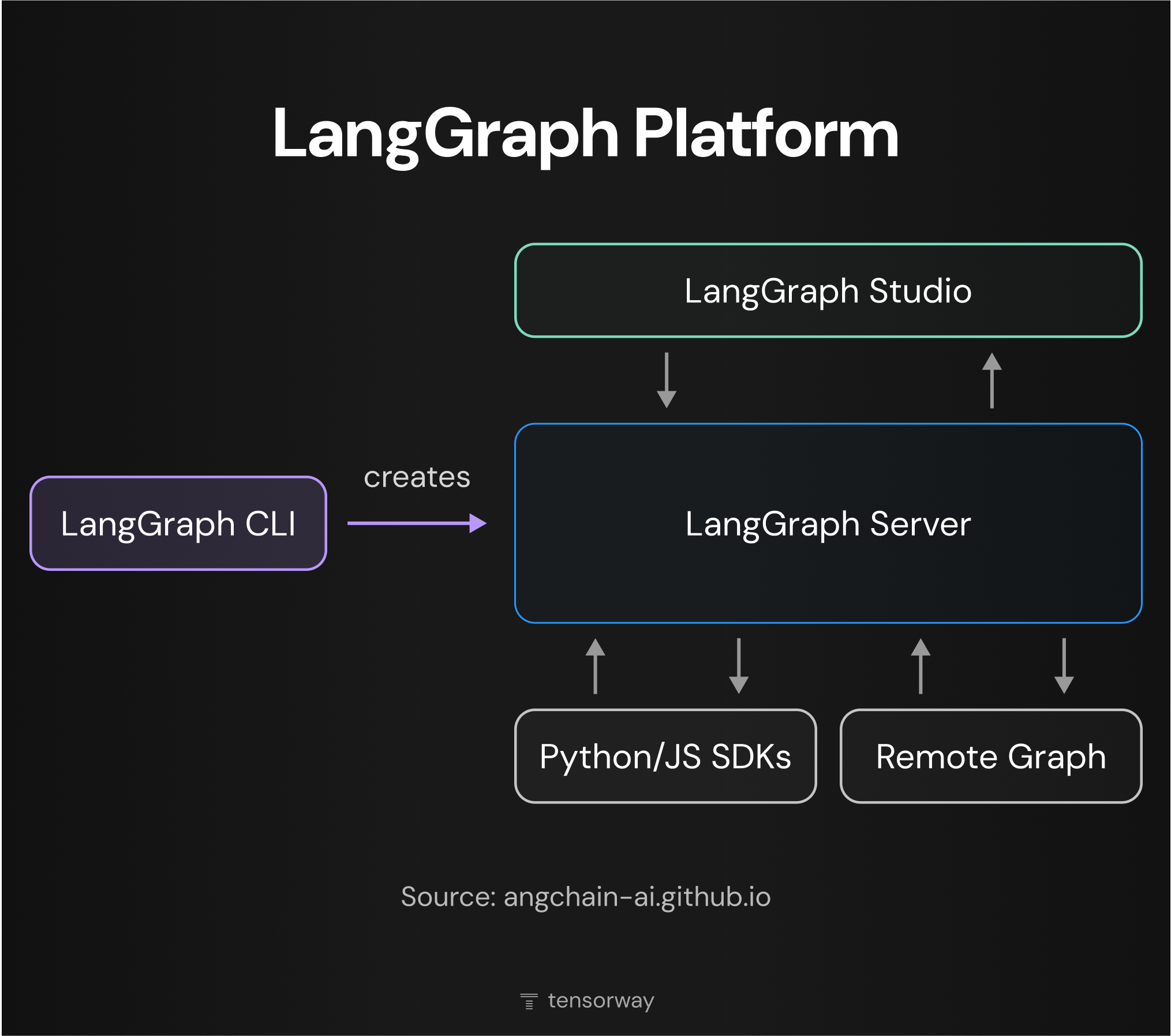

LangGraph

LangGraph is an open-source extension of LangChain. It is designed to support graph-structured logic flows in LLM apps.

With LangGraph, developers can define workflows as stateful graphs. This approach enables more dynamic control flows and complex decision-making logic. It’s especially beneficial for multi-agent coordination, tool-driven workflows, and long-running conversations.
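
The following sketch shows the general idea of a stateful graph with a conditional edge and a retry loop. It is written in plain Python and is not LangGraph's actual API; the node names `draft`, `review`, and `publish`, as well as the `run_graph` helper, are hypothetical.

```python
from typing import Callable, Optional

State = dict
# Each node reads and updates the shared state, then returns the name of the
# next node, or None to stop.
Node = Callable[[State], Optional[str]]

def draft(state: State) -> Optional[str]:
    state["attempts"] += 1
    # Hypothetical stand-in for an LLM call: the draft passes on the third attempt.
    state["draft"] = "ok" if state["attempts"] >= 3 else "too short"
    return "review"

def review(state: State) -> Optional[str]:
    # Conditional edge: loop back to "draft" until it passes or retries run out.
    if state["draft"] == "ok" or state["attempts"] >= state["max_attempts"]:
        return "publish"
    return "draft"

def publish(state: State) -> Optional[str]:
    state["published"] = state["draft"] == "ok"
    return None

def run_graph(nodes: dict[str, Node], start: str, state: State) -> State:
    current: Optional[str] = start
    state["path"] = []  # record visited nodes for inspection
    while current is not None:
        state["path"].append(current)
        current = nodes[current](state)
    return state

NODES = {"draft": draft, "review": review, "publish": publish}
final = run_graph(NODES, "draft", {"attempts": 0, "max_attempts": 5})
```

Here the state dictionary persists across nodes, and the `review` node decides at runtime whether to loop back or move on. A linear chain cannot express this kind of retry without custom control code around it.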

Advantages of LangGraph

- This LLM framework enables branching, looping, and conditional execution in LLM applications. As a result, it helps to overcome challenges that are typical for linear chains.

- Built-in state handling ensures persistent context across multiple nodes and steps.

- LangGraph can be seamlessly integrated with LangChain components and tools.

- It is a good option for solutions that should include back-and-forth reasoning, retries, or collaboration between multiple agents.

Disadvantages of LangGraph

- Like LangChain, LangGraph is known for its complexity. It’s much more difficult to create and maintain graph-based logic than linear chains.

- LangGraph’s community is smaller than LangChain’s. This means that there are fewer tutorials and third-party integrations.

- Software developers who have never worked with graph-based programming models before may face a time-consuming learning process.

LangSmith

LangSmith is a developer tool designed to debug, monitor, test, and improve LLM-driven apps. It integrates seamlessly with LangChain and LangGraph. With its help, development teams can easily track prompt behavior and model performance. It’s especially valuable for LLM applications that require strict quality control and collaboration across teams.

How a Team Can Use LangSmith: A Use Case Example

Suppose your team is building a chatbot. In this case, LangSmith can be used for:

- Tracing multi-step interactions across agents and tools;

- Debugging failed outputs;

- Running tests to evaluate prompt updates;

- Monitoring performance over time.
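
To show what tracing and debugging of this kind involves, here is a minimal sketch of a tracing decorator that records inputs, outputs, errors, and latency for each step. It is not the LangSmith SDK: `traced`, `TRACE_LOG`, and `classify_intent` are hypothetical names, and the log is kept in memory instead of being sent to a monitoring service.

```python
import functools
import time

TRACE_LOG = []  # in-memory stand-in for a tracing backend

def traced(step_name):
    """Record inputs, output or error, and latency for each call of a step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {"step": step_name, "inputs": args, "error": None, "output": None}
            start = time.perf_counter()
            try:
                record["output"] = func(*args, **kwargs)
                return record["output"]
            except Exception as exc:
                record["error"] = repr(exc)  # keep the failure for later debugging
                raise
            finally:
                record["latency_s"] = time.perf_counter() - start
                TRACE_LOG.append(record)
        return wrapper
    return decorator

@traced("classify_intent")
def classify_intent(message: str) -> str:
    # Hypothetical stand-in for an LLM call.
    if not message:
        raise ValueError("empty message")
    return "refund" if "refund" in message.lower() else "other"

classify_intent("I want a refund")
try:
    classify_intent("")
except ValueError:
    pass

# Failed runs can be filtered out of the log and inspected with their inputs.
failed_steps = [r for r in TRACE_LOG if r["error"] is not None]
```

A dedicated tool adds what this sketch lacks: persistent storage, a UI for browsing traces, dataset-based evaluations, and sharing across the team.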

Benefits of Using LangSmith

- It gives deep insights into how LLM components behave in your real-world use cases.

- It greatly simplifies such processes as debugging, testing, and refining prompts and workflows.

- LangSmith supports prompt versioning, annotations, and shared debugging sessions.

- It is useful for monitoring performance metrics like cost, token usage, and latency at scale.

Pitfalls of Using LangSmith

- It is a commercial tool rather than an open-source one, so using it involves additional costs. This can be a barrier for small teams and individual developers.

- LangSmith is primarily designed for the LangChain ecosystem. This limits its use with other frameworks.

- Teams may need time to learn how to use all the features effectively and achieve the highest results.

LlamaIndex

LlamaIndex is an open-source framework that enables RAG by connecting large language models to private or enterprise data sources. This LLM framework helps structure, index, and query your own data so that the LLM can generate more accurate, grounded responses.

LlamaIndex creates a data pipeline. Then, this pipeline:

- Collects data from various sources;

- Indexes this data into vector databases;

- Retrieves relevant information based on a user request;

- Augments the LLM prompt with the received context to generate a final reply.
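
The four steps above can be sketched in plain Python as follows. This is a toy illustration and not LlamaIndex's API: the "embedding" is a simple word count, the documents are invented, and the functions `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical. A real pipeline would use a learned embedding model and a vector database.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# 1. Collect: hypothetical documents standing in for company data.
documents = [
    "Refunds are processed within 14 days of the return request.",
    "Our support team is available on weekdays from 9 to 18.",
    "Shipping to Europe takes 3 to 5 business days.",
]

# 2. Index: store one vector per document.
index = [(doc, embed(doc)) for doc in documents]

def retrieve(query: str, top_k: int = 1) -> list[str]:
    # 3. Retrieve: rank documents by similarity to the query.
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]

def build_prompt(query: str) -> str:
    # 4. Augment: put the retrieved context in front of the question.
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

The string returned by `build_prompt` is what would be sent to the LLM. Because the answer is grounded in the retrieved context, the quality of the index directly affects the quality of the final reply.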

Pros of LlamaIndex

- With LlamaIndex, LLMs become useful for many real-world business tasks that should be based on company-specific data.

- It works with many vector stores and LLM providers.

- It is a flexible and community-supported LLM framework. Developers can leverage frequent updates and integrations.

Cons of LlamaIndex

- Its performance depends on index quality. Poor metadata design can be a reason for irrelevant results.

- For its fully effective use, you may need to rely on vector stores, embeddings, and sometimes custom pipelines.

- Managing real-time updates and multi-tenant queries is typically not complicated for smaller apps, but these tasks can be challenging for large deployments.

AutoGen

AutoGen is an open-source LLM agent framework developed by Microsoft for creating multi-agent AI systems. With its help, multiple agents can communicate, reason, and collaborate in solving complex tasks. Each agent can be customized with specific roles and goals. Therefore, AutoGen becomes highly suitable for scenarios that include autonomous decision-making or task delegation.
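
The sketch below illustrates the general pattern of role-based agents exchanging messages until a stop condition is met. It is not AutoGen's actual API: the `Agent` dataclass, the reply functions, and `run_conversation` are hypothetical, and the agents' behavior is hard-coded instead of being driven by a model.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    name: str
    role: str
    respond: Callable[[list[str]], str]  # stand-in for an LLM call conditioned on the role

def writer_reply(history: list[str]) -> str:
    # Hypothetical behavior: each call produces the next draft version.
    revisions = sum(1 for m in history if m.startswith("writer:"))
    return "draft v" + str(revisions + 1)

def reviewer_reply(history: list[str]) -> str:
    # Hypothetical behavior: approve the second draft, request changes otherwise.
    return "APPROVED" if history[-1].endswith("v2") else "please revise"

def run_conversation(task: str, agents: list[Agent], max_rounds: int = 5) -> list[str]:
    history = [f"user: {task}"]
    for _ in range(max_rounds):  # hard cap guards against endless agent loops
        for agent in agents:
            message = agent.respond(history)
            history.append(f"{agent.name}: {message}")
            if message == "APPROVED":  # termination condition
                return history
    return history

team = [
    Agent("writer", "Drafts the text", writer_reply),
    Agent("reviewer", "Checks the draft", reviewer_reply),
]
transcript = run_conversation("Write a product description", team)
```

The `max_rounds` cap and the explicit termination message are the kind of safeguards that developers typically have to add themselves when agents can otherwise keep talking to each other indefinitely.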

Advantages of AutoGen

- It is a highly flexible LLM agent framework that supports various functions, including advanced workflows and iterative reasoning.

- It makes it easier to design complex, modular AI systems without excessive complication of prompt logic.

- Thanks to built-in tool integration, multiple agents can be equipped with custom tools, APIs, or external services.

- This framework is maintained and backed by Microsoft. This brings credibility and stability to it.

Disadvantages of AutoGen

- When used for single-agent tasks or apps that don’t need coordination, it can add an extra layer of complexity.

- Agent loops or unclear objectives can lead to inefficient behavior.

- Developers need to implement safety and error handling to ensure reliability.

If you are also considering the launch of an agentic AI solution, it could be interesting for you to explore how our team coped with such a task. To find project details, follow this link.

Final Word: Quick Comparison of Top LLM Frameworks

All the above-mentioned frameworks have their specific use cases and strengths. To better demonstrate this, we have gathered the most important facts in the table that you can find below.

How to Choose the Best LLM Framework

Before integrating an LLM-powered solution, you need to perform serious analytical work. In the first step, you should evaluate your business maturity for LLM integration. Then, you will have to find the right tools and frameworks for your project.

To select the most appropriate option, you need to consider the following factors:

- Use case. For instance, for simple prompt workflows, you can choose LangChain; for RAG, it’s recommended to use LlamaIndex; for autonomous agents, you can take AutoGen or LangChain Agents. Meanwhile, LangGraph is a good option for graph-based logic.

- Integration needs. It’s essential to check the compatibility of a framework under consideration with your preferred LLMs and required external tools.

- Experience of your developers. You need to analyze the learning curve of each framework and verify whether your developers have experience with any of them. If you don’t have such expertise in-house, it could be sensible to turn to professional AI development services.

- Flexibility. Open-source frameworks like LangChain or AutoGen are flexible. However, they may need more setup. At the same time, commercial platforms provide managed services and support, but you will need to pay for using them.

Do you need help in choosing the best framework for your AI project? Or are you looking for a professional team to build your solution? At Tensorway, we are always at your disposal. Our developers have outstanding expertise in various domains and can address even the most specific business needs with the highest efficiency. Contact us to get more information about our services and successfully delivered projects.

FAQ

What makes LangChain’s chain architecture different from traditional LLM pipelines?

Traditional LLM pipelines often rely on a single prompt-response interaction. LangChain enables developers to build chains that sequence multiple LLM calls and logic steps into modular, reusable workflows. This means that chains can support complex reasoning, tool integration, and conditional logic, which makes it easier to build multi-step AI applications.

Are frameworks like AutoGen helpful for small businesses, or are they tailored for enterprises only?

Originally, AutoGen was designed to address the needs of scalable AI systems. But thanks to their open-source nature and flexibility, such frameworks can be helpful for small teams as well. They can be used in prototyping AI assistants, automating customer interactions, or streamlining internal workflows. Nevertheless, some technical expertise, which not every small team has, is a must for initial setup and maintenance.

What ROI metrics help to assess the efficiency of the LangSmith implementation for LLM monitoring?

If you have decided to use LangSmith for monitoring LLM efficiency, it is recommended to track such metrics as error rates, iteration cycles, and improvements in user satisfaction. Some other helpful metrics are LLM latency, token usage, and prompt performance. To evaluate business impact, reductions in manual effort or customer support time can also be taken as indicators for ROI estimations.
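
As a rough illustration of how some of these metrics can be computed, the sketch below aggregates error rate, average latency, token usage, and cost from a list of run records. All data is invented: the `runs` list, the `summarize` function, and the price per 1,000 tokens are assumptions, and real records would come from your monitoring tool's export.

```python
from statistics import mean

# Hypothetical run records; in practice these would come from a monitoring export.
runs = [
    {"ok": True, "latency_s": 1.2, "tokens": 800},
    {"ok": False, "latency_s": 3.0, "tokens": 1500},
    {"ok": True, "latency_s": 0.8, "tokens": 700},
    {"ok": True, "latency_s": 1.0, "tokens": 1000},
]
PRICE_PER_1K_TOKENS = 0.002  # assumed price in USD, not a real quote

def summarize(records, price_per_1k):
    """Aggregate basic monitoring metrics over a list of run records."""
    total_tokens = sum(r["tokens"] for r in records)
    return {
        "error_rate": sum(1 for r in records if not r["ok"]) / len(records),
        "avg_latency_s": mean(r["latency_s"] for r in records),
        "total_tokens": total_tokens,
        "cost_usd": total_tokens / 1000 * price_per_1k,
    }

report = summarize(runs, PRICE_PER_1K_TOKENS)
```

Comparing such a report before and after a prompt or workflow change gives a simple baseline for estimating whether the change paid off.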
